And how Generative-AI is creating new porn

Generative-AI caused a media frenzy when text-to-image models went public over the summer, including big players like OpenAI's DALL-E 2, Stability AI's open-source Stable Diffusion, and Midjourney. In their wake came billion-dollar startups, and a steady stream of entrepreneurs and venture capitalists rebranded themselves as AI experts. But artists and technology experts continued to raise concerns about generative-AI's ability to spread misinformation or infringe on artists' copyright.

The media storm isn't just about the technology's creative capabilities or the possible demise of human art, although those topics came up a lot. It was, and still is, about copyright, ownership, and deepfake fraud.

In September, Getty Images banned AI-generated art from its platform. The stock photo agency was concerned about how image generators scrape publicly available, and mostly copyrighted, content from the internet to produce new imagery. The scraped images frequently come from stock photo websites like Shutterstock and Getty, news sources, or original artworks, and are used without credit or compensation to the original creators. A class-action lawsuit filed in California this month accuses GitHub of copyright infringement over its Copilot coding assistant, and if the case goes against GitHub, it could set a legal precedent for disputes between creators and tech companies.

US copyright law protects only works of human authorship, so a work created entirely by a non-human can't be copyrighted, but here's where it gets foggy: if the work has had human input, it may qualify for copyright protection. Meanwhile, commercial companies lean on fair use by funding academics to train and test AI technology on public images under the banner of research, then using the results commercially. It's a bit sneaky, and there's already a term for it: 'AI data laundering.'

AI Content Creation

Generative-AI tools have emerged to assist with content creation. Advertisers, marketers, and businesses of any kind can use AI to create content and bypass human content producers altogether. AI isn't just muscling in on text; it can also create images, audiovisual content, music, and video from text prompts.

AI tools used in advertising and content marketing can generate targeted and engaging content using algorithms and data analysis. With synthetic media, the future of advertising lies in curating, producing, and delivering personalized multimedia experiences for individual consumers.

PicsArt is adding an AI Image Generator and AI Writer to its slate of AI tools to create images and advertising copy. Users enter a word, phrase, or paragraph, and the AI system, trained on images and text scraped from the internet, generates a unique image or piece of copy. The PicsArt platform is available as an iOS app and is designed to support small business marketing teams by making copywriting tools available to anyone. It includes an ad writer, social media bio creator, rephraser, and marketing slogan creator, which, according to its designers, makes creativity accessible to all and increases productivity.

Imagen Video is Google's text-to-video AI tool, which can create video clips from simple text prompts. Google researchers trained the system on an internal dataset of 14 million videos and 60 million images, and it shows an understanding of 3D objects. It's still in development, and Google plans to bring its AI imagery tech to the AI Test Kitchen app, where it will generate hyper-specific images from short text descriptions. Meta's Make-A-Video also creates unique videos from text prompts. You can see how businesses looking to save a few bucks could add this easy-to-use, low-cost technology to their content strategy.

The Future of Work

Many generative-AI image tools, such as Stable Diffusion, use diffusion models to turn text into images. These models are trained on billions of images scraped from the internet, many of which are copyrighted or, perhaps even more troubling, taken from personal family photos and videos. Why has no one mentioned this?

Should we be worried about our jobs? Yes and no. Was I worried when cheap, easy-to-use digital still and video cameras swamped the market, making anyone with a pair of eyes another competitor in the creative industry? Like most professionals, I played to my strengths, specialized, diversified, and retrained.

Text-to-image AI has captured and captivated the public imagination. It's fun, viral, and easy to use, and you don't need to be an artist. And there's the problem. Artists are having an existential crisis because generative-AI is a real game changer across many crossover industries: photography, filmmaking, gaming, music, writing, advertising, and marketing. We're either in deep shit or getting ahead of ourselves. Maybe we aren't giving ourselves enough credit for being uniquely, creatively human.

AI Porn

Of course, it was only a matter of time before generative-AI was used to produce pornographic material. According to TechCrunch, the operators of Unstable Diffusion are monetizing AI porn generators and building businesses around AI systems tailored to porn. On Reddit and 4chan, users generated anime-style nude characters, mostly women of course, and fake nude images of celebrities. A few years ago, deepfakes of celebrities like Taylor Swift were used to create fake pornographic material. Reddit shut down subreddits dedicated to AI porn, and communities like Newgrounds, which hosts adult art, have banned AI-generated art, but despite this, new forums continue to emerge.

Unstable Diffusion hosts channels catering to a variety of porn preferences, and users or creators can invoke its bot to generate art that fits their theme. The platform says it wants to be an ethical business and prohibits child porn, violence, and deepfakes of celebrities, but these rules are difficult to enforce, and there are always ways around them.

Stability AI doesn't prohibit developers from creating porn; the company places responsibility for how its models are used on the user, not on its business model. Text-to-image AI systems like Stable Diffusion are trained on a dataset of billions of captioned images, from which they learn the associations between written concepts and images.

While many of those images come from copyrighted sources like Flickr, Stability AI argues its systems are covered by fair use. Discord users must submit images for moderation, but as the platform becomes more widely used, it becomes harder to monitor. In 2020, MindGeek, Pornhub's parent company, lost the support of major payment processors after its site was found to be hosting child porn and sex trafficking videos.

AI-generated porn has negative consequences for artists, adult content actors, and content creators: when sexual content they created is fed into training algorithms without their consent, their privacy and copyright are violated.

Unstable Diffusion grants users full ownership of, and permission to sell, the images they generate. What if your artwork was used to generate pornographic images and videos? Licensing work, or allowing artists to exclude their work from training datasets, may be the solution.

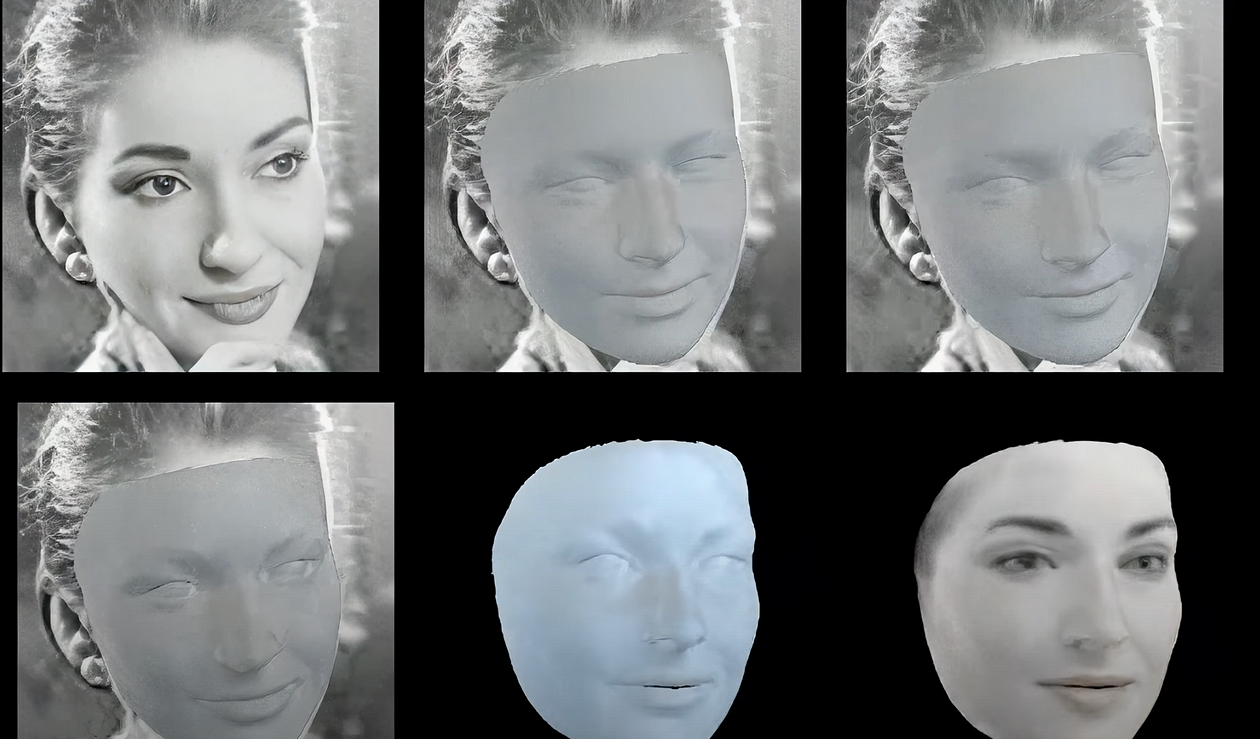

Facial Recognition Deepfake Fraud

Generative-AI deepfake faces could be used to protect privacy on social media platforms. Algorithms that generate fake faces could change people's appearances in photos they don't want shared. At the same time, the increasing use of facial recognition AI makes the technology ripe for deepfake fraud. Platforms already let users decide whether they are tagged in photos; now there could be a way to stop others from sharing photos of them on Facebook or Instagram.

Ginger Liu is the founder of Ginger Media & Entertainment, a Ph.D. Researcher in artificial intelligence and visual arts media, and an author, journalist, artist, and filmmaker.