President Biden has issued an executive order to safeguard against the risks of AI across multiple industries, including media, security, and the creative sector. The UK is hosting a two-day AI summit with 100 world leaders, academics, and tech industry figures such as Elon Musk. The aim of the summit is to build an international consensus on AI. If the world can't come together on the war between Israel and Hamas, then we can expect the talk to be just talk.

There's good news in the NO FAKES Act, which aims to protect Hollywood actors and singers. There's bad news for artists suing AI art generators for copyright infringement. But artists are fighting back with a poisoning tool designed to stop AI art generators from scraping their intellectual property.

President Biden Issues AI Safety Order

President Joe Biden has issued an executive order that creates AI safeguards for national security and recommends watermarking AI-generated photos, video, and audio. Biden stated that deepfakes have the potential to "smear reputations, spread fake news and commit fraud." Back in July, Biden invited AI companies to agree voluntarily to self-regulation in the absence of AI legislation.

In the next 12 months, North American firms are projected to invest $5.6 billion in generative AI technology that can create images, text, and other media. Biden's order will affect government contracts, healthcare, life sciences, defense contracts, and much more. New standards in cybersecurity, data protection, IP, and ethics will roll out and will have to change as fast as new AI technology is created.

Creatives have also welcomed the executive order. The Human Artistry Campaign, which sets out principles for AI applications that support and protect human creativity, welcomed the new AI safeguards and issued the following statement in response to Biden's order:

"The inclusion of copyright and intellectual property protection in the AI Executive Order reflects the importance of the creative community and IP-powered industries to America's economic and cultural leadership."

NO FAKES Act Aims to Protect Hollywood Artists

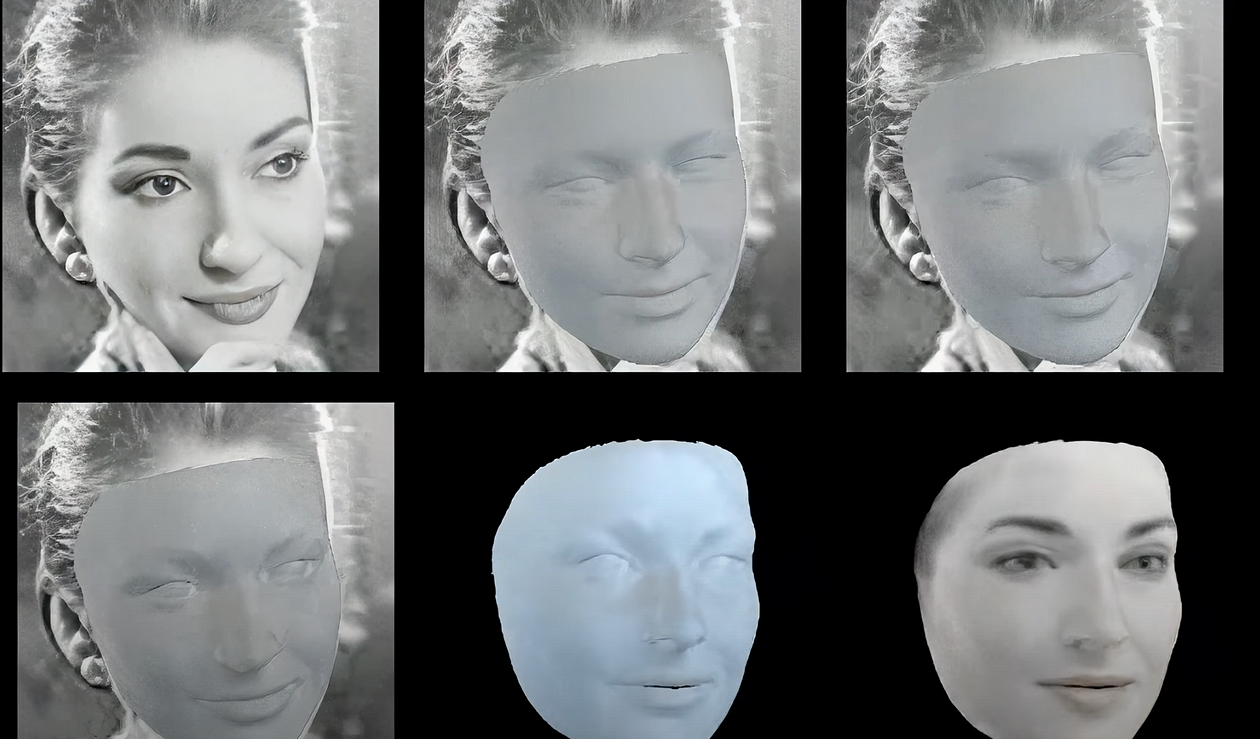

The NO FAKES Act enables artists such as actors and singers to license their digital replica rights to other parties, but only if they have legal representation to oversee a written agreement. Liability under the Act arises when a digital replica is produced or published without consent. A digital replica is a computer-generated visual or audio likeness of a person. Such a replica can be almost indistinguishable from the real thing and could therefore be used in place of a human actor or singer. You can see why this Act is important, and why SAG-AFTRA, the union representing actors and recording artists, has been fighting for intellectual property rights and the protection of its members' livelihoods.

Other provisions of the NO FAKES Act are aimed at internet platforms, websites, and social media outlets, which will have to develop ways of policing the unauthorized creation and dissemination of digital replicas or risk losing their immunity from liability for hosting user-generated content. For example, social media platforms like Twitter and video hosting platforms like YouTube already have mechanisms in place to flag sexual and violent content. YouTube videos are also flagged for music copyright if a user's video uses an unlicensed soundtrack. It will be interesting to see how deepfakes will be monitored without viewers having to report the content.

Artists Lose AI Art Generator Copyright Case

Copyright infringement claims against DeviantArt and Midjourney have been dismissed. The artists suing generative AI art companies demanded compensation for, and the right to give permission for, the use of their images, which had been downloaded from the internet to train AI systems. The lawsuit did have some success against Stability AI, with a federal judge allowing an infringement claim against it to advance while dismissing the others.

U.S. District Judge William Orrick concluded that the copyright infringement claims against DeviantArt and Midjourney could not be substantiated because the artists had not shown that the AI tools created work identical to theirs. However, the judge allowed the case against Stability AI to proceed because the artists had made sufficient claims of copyright infringement in its creation of Stable Diffusion. The company has denied copying artists' work to train its model. The artists will have to prove that their works were used for training, which may prove difficult.

Midjourney is trained on the LAION-5B dataset, which was built by scraping the internet indiscriminately, including copyrighted artworks. Midjourney is fed text prompts, and the AI creates a set of composite images. You can see why artists have hired lawyers: we were never asked for permission to include our images in the dataset. There's no compensation or recognition for artists, and unfortunately, tech giants have all the money in the world to cover their backs and drag out court cases for years. It's not just individual artists who are taking AI companies to court. Getty Images is suing Stability AI for allegedly copying the contents of its photo library without a license and using it to train Stable Diffusion.

Artists Fight Generative AI with Data Poisoning Tool

A new tool called Nightshade allows artists to make invisible changes to the pixels in their artwork before uploading it online. If the images are then scraped into AI training sets, the poisoned data can cause the resulting model to break. The tool aims to "poison" training data and damage future image-generating AI models such as Stable Diffusion, DALL-E, and Midjourney. The Nightshade attack exploits a security vulnerability in generative AI models, which are trained on huge amounts of data. Poisoned data could distort what a model produces, for example generating images of cars instead of balloons. It's like artist anarchy for the AI generation.

Meta's Generative AI Training Data Opt-Out Is Bogus

Meta announced that its AI models were trained on public Facebook and Instagram posts and that the company would provide an "opt-out" from the training data. However, Wired interviewed a dozen artists who were unable to opt out and instead received an "unable to process request" message. Meta argues that there has been confusion about the data deletion request form, which, it says, is not an opt-out tool. The company goes on to say that any data on its platforms can be used for AI training. Meta has not shared which third-party data its models have been trained on, but shouldn't we, as users, have the right to protect our data and have a say in how it is used?

Is this just another case of big tech sticking a middle finger up to users, businesses, and governments who dare to get in the way of big cash-generating enterprises?

Ginger Liu is the founder of Hollywood’s Ginger Media & Entertainment, a Ph.D. Researcher in artificial intelligence and visual arts media — specifically grief tech, digital afterlife, AI, death and mourning practices, AI and photography, biometrics, security, and policy, and an author, writer, artist photographer, and filmmaker. Listen to the Podcast — The Digital Afterlife of Grief.
