Grief tech companies think so

Can artificial intelligence recreate our deceased loved ones, and will it help or hinder how we grieve? Tech experts and grief therapists have debated the pros and cons of AI, but because the technology to replicate the deceased is still in its infancy, there is not yet a consensus on its social impact.

In an interview with CTV News, Richard Khoury, President of the Canadian Artificial Intelligence Association (CAIAC), says that impersonating the dead is possible but don’t expect perfection:

“When it comes to recreating a real person…what will be missing is my memories, my ideas, my personality… It’s not so much an AI problem (but) a model documentation problem.”

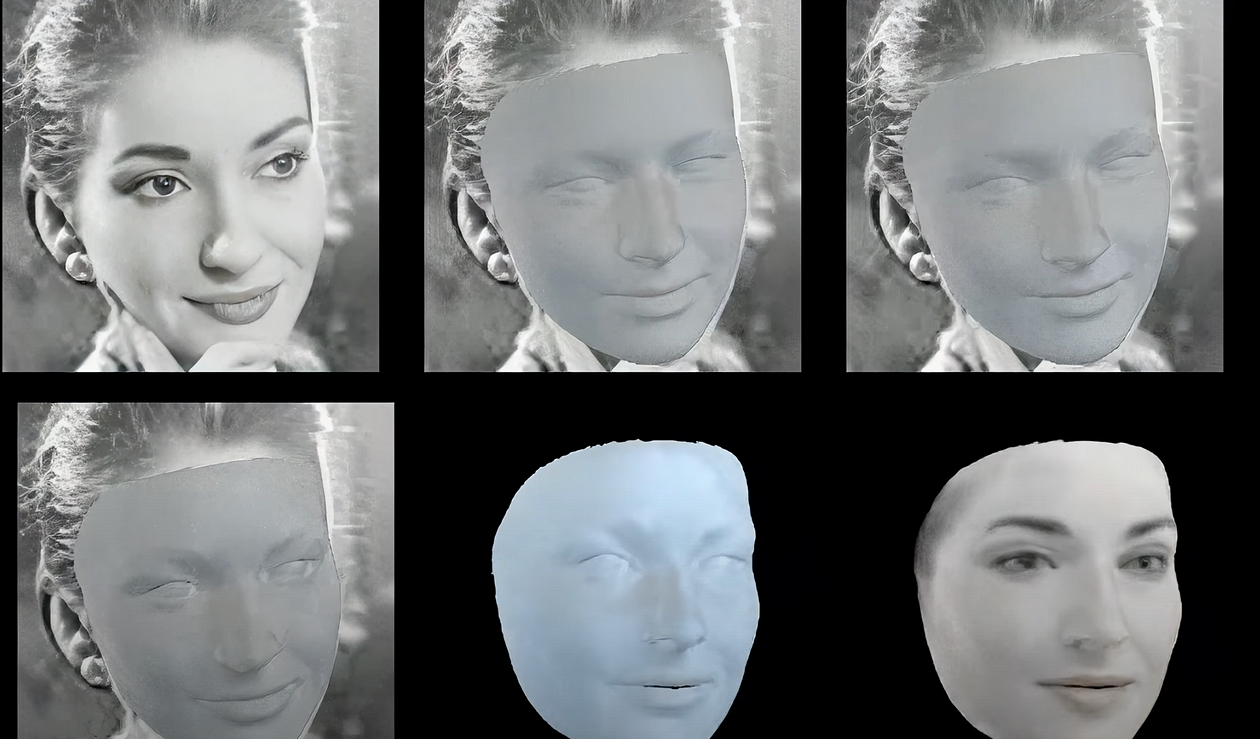

In my research, I have created a persona using transcript data, voice synthesis, and video models. AI wrote my dialogue, copied my voice, and turned a still portrait into a video. The results weren’t perfect, but they were close enough.

AI needs to be trained by people, and it gathers data to inform its responses. An exact replica of a loved one is a long way off. AI is unable to separate fact from fiction, and because it is human nature to lie, you can see what issues lie ahead. I would have to document every single moment, event, or experience in my entire life in order for the AI to accurately copy me.

According to Khoury, “What we document and what we actually think are very different things… there’s more to us than just our memories, or just our feelings relative to those memories.”

When it comes to the background or context of a person, her personality and essence, the tech isn’t there yet. Chatbots are limited in how much context they can sort through. AI can only make decisions based on the input data and directions it gets from humans, so it can only generate a superficial copy of the deceased. “…to turn a digital copy of someone who you just lost and chat with them as if they were still there and reminisce about old times…it really doesn’t sound like healthy grieving behavior,” says Khoury.

Grieving isn’t linear. I have developed prolonged grief because of my research. I have been looking at old photographs and letters, and they have brought up so many memories that I am grieving all over again for my father, who died 25 years ago. Perhaps Khoury is right.

How would AI impact the grieving process? Grief therapists aim to get the grief out of someone’s head. Part of a person’s grief process is recognizing their loss, reacting to the separation, recollecting the experience, relinquishing old attachments, and reinvesting their emotional energy elsewhere. Chatbots can help to create dialogue to get that grief out and talk about it.

An organization from the Dutch funeral industry recommends that individuals who want to be represented at their funerals by chatbots or holograms after their passing should indicate this in their wills. However, Brigitte Wieman, the director of the Dutch BGNU, is skeptical:

“Determining whether AI can utilize a person’s data, messages, and images after their death should be subject to certain rules.”

Los Angeles-based company AE Studio has developed a program called Seance AI, which allows the bereaved to talk to a chatbot of their deceased loved one via an app. Product designer and manager Jarren Rocks told Futurism:

“It’s essentially meant to be a short interaction that can provide a sense of closure. That’s really where the main focus is here… It’s not meant to be something super long-term. In its current state, it’s meant to provide a conversation for closure and emotional processing.”

I will try out Seance AI in a follow-up article.

Ghostbot Scams

Digital reincarnations of the dead, or ghostbots, could be used in cyber scams. A ghostbot is an artificial persona, such as a chatbot or deepfake, that replicates and imitates a person’s facial features, voice, and personality.

According to Dr. Marisa McVey from Queen’s University Belfast:

“‘Ghostbots’ lie at the intersection of many different areas of law, such as privacy and property, and yet there remains a lack of protection for the deceased’s personality, privacy, or dignity after death… While it is not thought that ‘ghostbots’ could cause physical harm, the likelihood is that they could cause emotional distress and economic harm, particularly impacting upon the deceased’s loved ones and heirs.”

UK data protection and privacy laws do not extend to heirs after a person’s death, making it unclear who has the power to produce our digital personas.

Ginger Liu is the founder of Ginger Media & Entertainment, a Ph.D. Researcher in artificial intelligence and visual arts media, and an author, journalist, artist, and filmmaker.