EU Parliament Draft Rules for AI Act

The European Parliament made significant strides in tech policy last week when it approved its draft rules for the Artificial Intelligence Act. The vote coincided with the European Commission bringing new antitrust charges against Google, making it a busy week for tech regulation in Europe. The AI Act vote received overwhelming support, and experts are calling it one of the most important developments in AI regulation worldwide.

However, the European legislative process is complex. In the next step, members of the European Parliament will negotiate with the Council of the European Union and the European Commission to iron out the details before the draft rules become enforceable law. Given the differences among the three institutions' drafts, reaching a compromise could take up to two years.

The recent vote established the European Parliament's position for the upcoming final negotiations. Like the EU's Digital Services Act, which establishes a legal framework for online platforms, the AI Act takes a risk-based approach, imposing restrictions according to the perceived level of risk associated with an AI application. Businesses will be required to submit their own risk assessments of their use of AI.

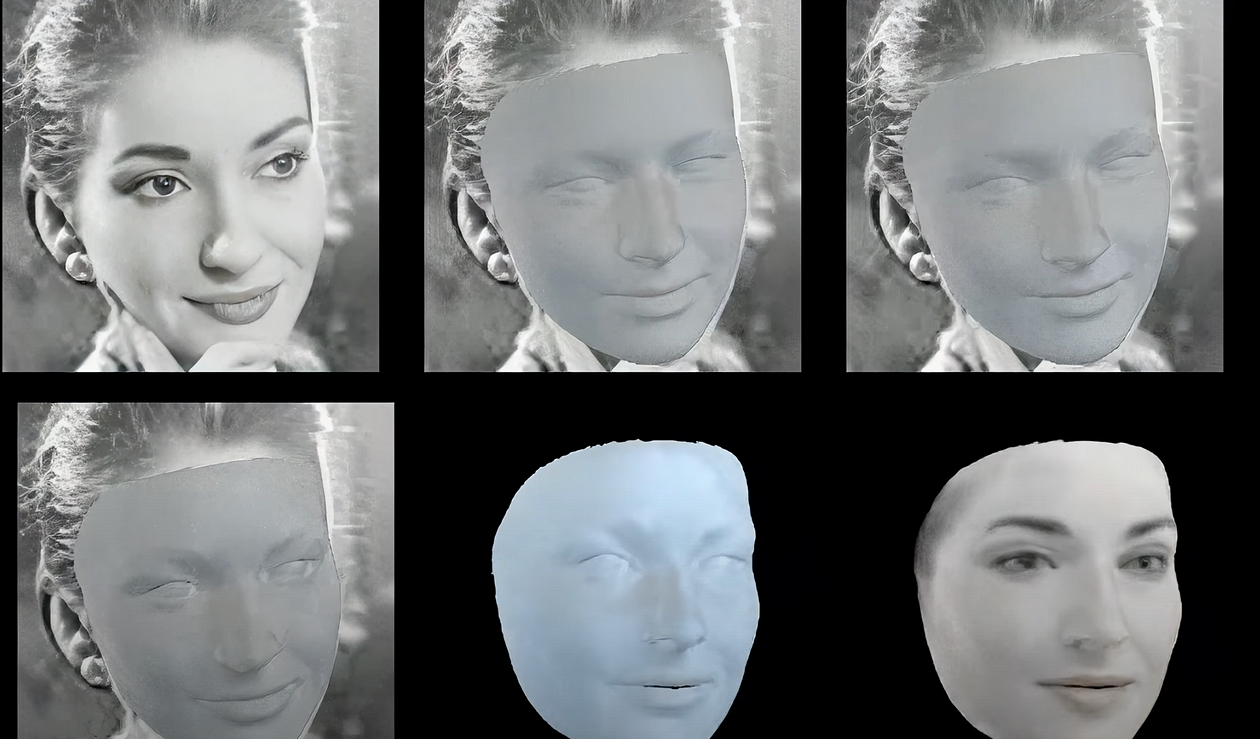

The implications of the AI Act are far-reaching and include several notable measures. First, the European Parliament's draft text bans emotion-recognition AI in policing, schools, and workplaces. Developers argue that AI can identify when a student is struggling to understand certain material or when a driver may be falling asleep, but critics have questioned the accuracy and bias of emotion-recognition software. Notably, the draft text does not ban facial detection and analysis, which may point to a future political battle on the topic.

Another significant proposal is the ban on real-time biometrics and predictive policing in public spaces. This is expected to spark substantial debate as EU bodies work out how such a ban would be written into law and enforced. Advocates of modern policing oppose banning real-time biometric technologies, arguing that they are necessary. Some countries, like France, plan to expand their use of facial recognition.

The draft text of the AI Act also seeks to outlaw social scoring, a practice in which public agencies use data about individuals' social behavior to form generalizations and profiles. However, social scoring is a complex issue, as similar practices are used to determine mortgage eligibility, set insurance rates, and make hiring and advertising decisions. The practice is not banned in the draft texts from the other EU institutions, which suggests that reaching a consensus may be difficult.

Additionally, the draft proposes new restrictions on generative AI and calls for a ban on copyrighted material in the training sets of large language models, such as OpenAI's GPT-4. European lawmakers have already raised data privacy and copyright concerns about OpenAI, and the draft bill also requires AI-generated content to be clearly labeled as such. The European Parliament will now need to win support from the European Commission and individual member states, all of which are likely to face lobbying pressure from the tech industry.

Another important detail of the AI Act is the increased scrutiny of recommendation algorithms used by social media platforms. The new draft classifies recommender systems as high-risk, a significant escalation compared to previous proposals. If enacted, this would subject recommender systems on social media platforms to more rigorous examination of how they work, potentially making tech companies more accountable for the impact of user-generated content.

Margrethe Vestager, executive vice president of the European Commission, describes the risks of AI as widespread and is concerned about the future of trust in media information, social manipulation by bad actors, and overwhelming mass surveillance.

Ginger Liu is the founder of Ginger Media & Entertainment, a Ph.D. Researcher in artificial intelligence and visual arts media, and an author, journalist, artist, and filmmaker.