Death tech conversational video platforms, commercial AI video, and generative AI art that creates a mix of races

I’ve been playing around with conversational video platforms from death tech companies, generative AI video services for business, and generative AI digital art to test whether they can create something close to the essence of me and my identity. These platforms have limitations because their services are designed for the masses, so the aesthetics and content can seem pretty generic.

I’m still trying to get my head around why I would pay a subscription service to record conversations with my elderly relative when I can easily just stick a cell phone in front of my Great Aunt Betty and video her answers to my questions. But hey, that’s capitalism.

HeyGen

First up is HeyGen, which creates business videos with generative AI. Users can easily create an avatar with a voice and feed it text, and the result is an impressive addition to PowerPoint presentations. Or you can add your own image, as I did. Check out my video below with the corporate jingle and generic American accent. I created the text with ChatGPT.

Businesses never need to hire video production companies ever again, so expect a lot of job losses in that field, including actors.

HeyGen video: https://www.youtube.com/watch?v=Sfix9Y3nVEI

StoryFile

Death tech company StoryFile is based in Los Angeles and was founded by CEO Stephen Smith. If their AI looks familiar, it’s because the same company was responsible for the interactive holograms of Holocaust survivors at the USC Shoah Foundation. Smith’s mother, Marina Smith, passed away in June last year and he attended her funeral in Nottingham, England. The 87-year-old Mrs. Smith MBE was a lifelong Holocaust campaigner, and her family wished for her message and educational work to continue after her death.

At her funeral, Mrs. Smith was projected on a video screen where she answered questions from her mourners. Her answers were prerecorded while she was still alive and the data was fed into StoryFile. There are about 75 answers to a bank of 250,000 potential questions and each video answer is around two minutes long. The AI system selects the appropriate clip to play in response to each question from the people viewing the video, so the deceased appears to listen and reply. The answers play in real time, and Mrs. Smith is seen pausing and listening to questions, which gives the illusion of a live conversation.
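As a rough illustration of the clip-selection idea, here is a minimal Python sketch that matches a viewer's question to the closest prerecorded answer. It is not StoryFile's actual system, which isn't described here. The clip filenames, the prompts, the `select_clip` function, the 0.2 threshold, and the simple word-overlap (cosine similarity) scoring are all assumptions made up for this example.

```python
import math
import re
from collections import Counter

# Hypothetical bank of prerecorded clips: filename -> the question it answers.
CLIPS = {
    "childhood.mp4": "Where did you grow up and what was your childhood like?",
    "career.mp4": "What work did you do during your career?",
    "advice.mp4": "What advice would you give to young people?",
}
# Hypothetical clip played when nothing matches well enough.
FALLBACK_CLIP = "sorry_no_answer.mp4"


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[word] * b[word] for word in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def select_clip(question, clips=CLIPS, threshold=0.2):
    """Return the clip whose recorded question best matches the viewer's question."""
    asked = Counter(tokenize(question))
    best_clip, best_score = FALLBACK_CLIP, 0.0
    for clip, prompt in clips.items():
        score = cosine(asked, Counter(tokenize(prompt)))
        if score > best_score:
            best_clip, best_score = clip, score
    # Below the (assumed) threshold, fall back rather than play a poor match.
    return best_clip if best_score >= threshold else FALLBACK_CLIP


if __name__ == "__main__":
    print(select_clip("Where did you grow up?"))
    print(select_clip("Favorite pizza topping?"))
```

A real product would presumably use speech recognition and a far more capable language model, but the shape is the same: score every recorded answer against the question, play the best one, and fall back to a stock response when nothing fits.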

I was impressed with the AI video avatar of William Shatner, which is promoted on their website. When I came to record my own conversational video, I was less impressed. Answering thirty questions about various episodes in my life was time-consuming and boring, which comes across in some of my lackluster answers. I can’t imagine a 90-year-old loving this.

Although I do have a green screen and studio lights which I could have used to jazz up the presentation, I wanted to be just an average Josephine who doesn’t happen to have a photography studio and equipment. I’m not going to pull any punches. I look like crap. Is this the legacy I want to pass on to the next generation?

StoryFile video: https://www.youtube.com/watch?v=XLcoyEvTo04

HereAfter AI

HereAfter AI’s interviewers ask a series of questions and the answers are accessed through a smartphone or speaker app by a chosen family member. I upgraded to the paid version and the questions seemed to go on for an eternity.

After I answered and recorded a question, I was asked to record a specific prompt that will be used when my bot is activated. Using the American term “sure” instead of “okay” sounded ridiculous after hearing it for the tenth time, because my bot had problems answering my questions and so defaulted to “sure.” Every so often, it would blurt out “sure,” a term that, despite my American heritage, I never use. Do I have to upgrade to get a better service?

I also couldn’t connect my bot to my smartphone or Alexa. My recordings do not hide the tedium of answering multiple boring questions. I would much rather have a book of stories with pictures than this Alexa avatar. I’m not sure I got a sense of the real me, that essence I was talking about. Maybe portrait photography still has the lead on that.

HereAfter AI video: https://www.youtube.com/watch?v=MZJ9N8EVsvs

Live Story from MyHeritage

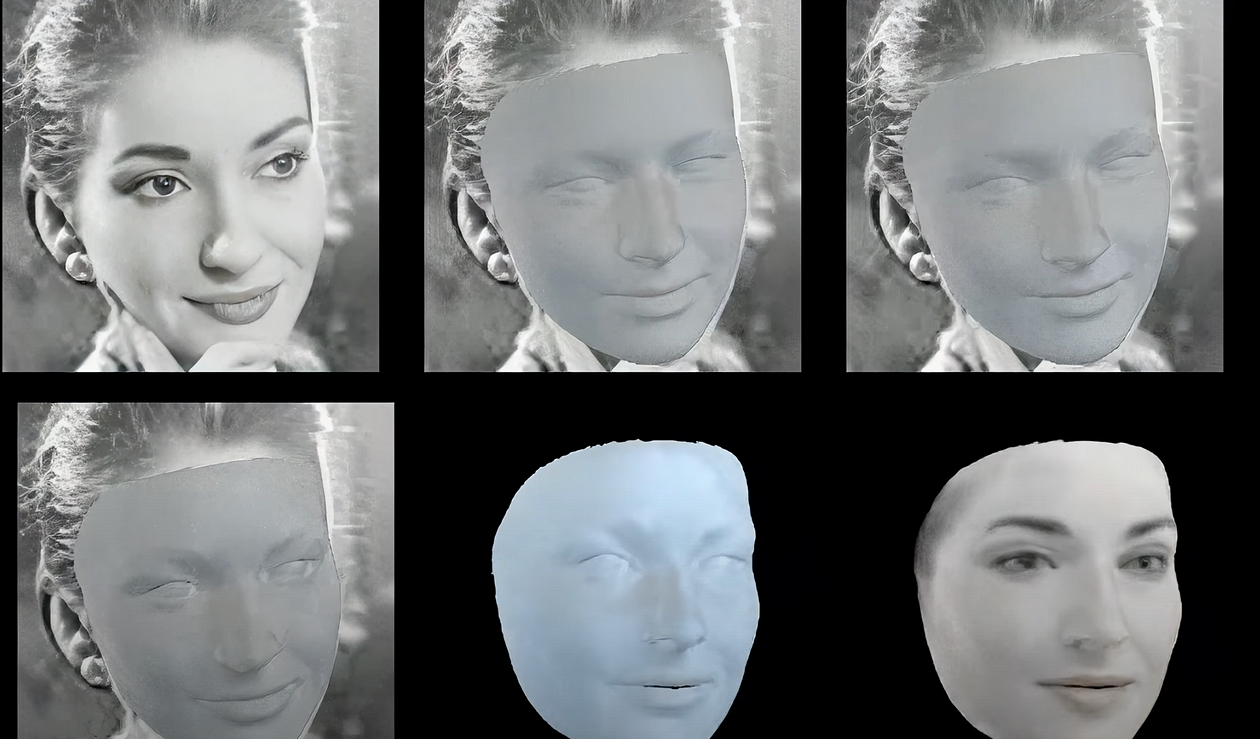

I have a soft spot for MyHeritage’s Deep Nostalgia because when it first came out, it was incredible to animate family photographs, and what the AI could do resonated around the world. We uploaded old pictures of our deceased loved ones and imagined for a moment, through the animation of their facial features, that they were present and part of our lives once more. This was before things started going crazy about AI taking over our jobs and the world.

How does Deep Nostalgia work? Facial recognition company D-ID created Deep Nostalgia for MyHeritage, and it uses GANs to animate photographs. A GAN (generative adversarial network) is made up of two AI systems trained against each other. One system, the generator, creates new images, and the other system, the discriminator, judges whether an image is real or fake.
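For readers who want the formal version of that two-system contest, the standard textbook GAN training objective can be written as below. The symbols are the conventional ones and are not taken from anything D-ID or MyHeritage has published: G is the system that creates images from random noise z, D is the system that scores an image as real (close to 1) or fake (close to 0), and x is a real photograph. Whether Deep Nostalgia uses exactly this objective is an assumption.

$$
\min_{G} \max_{D} \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
$$

In plain terms, D is trained to push this value up by telling real images from generated ones, while G is trained to push it down by producing images that D mistakes for real.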

AI-animated renderings are created from photographs of human faces. The technology uses deep learning algorithms to predict parts that are missing from a photograph, such as teeth and ears, and creates an automated sequence of gestures and movements, like smiling, blinking, and head-turning.

Re-animation technology is challenging the definition of what a photograph is, but it hasn’t quite matched who I am, yet. That’s where deepfakes come in, but that’s another story.

Stability AI

Okay, so technically not a conversational video platform, but I wanted to see what images it would come up with when I fed it my racial heritage. Generative AI has had a bad rap for scraping the internet and reproducing predominantly white and male images.

Prompt 1 — “The true essence of a person who is a woman artist with red hair and mixed race of English, Chinese, and Irish and who was born in Los Angeles, America.”

As you can see below, it comes up with a combination of these races from 100% Asian or European to a mix, which is what I and many of us are. There’s also a stereotypical American look and an Irish look.

My father saved a newspaper clipping about what an American would look like in 2034. An updated version came out in National Geographic in 2013. An image was created that mixed multiple races to create the face of America. This image and story reflected our own cultural and racial background: a mix of Chinese, English, Irish, and American.

Visualizing Race, Identity, and Change: A feature in National Geographic’s October 125th anniversary issue looks at the changing face of America in an article… (www.nationalgeographic.com)

Prompt 2 — “An American British woman with red hair who is quarter Chinese, quarter Irish, and English.”

This second prompt wasn’t as successful as the first. The images are cartoonish but I can see that there is a mix of races.

Can an AI avatar create the true essence of a person who is of multiple races and ethnicities and convey the importance of these identities within an AI persona? What do you think?

My Ph.D. research aims to create the essence of myself using AI and the visual arts, and I want to find out if AI can replace portrait photography in capturing the true essence of a person. The history of portrait photography starts in the studio, on the coattails of portrait painting, and both painters and photographers were on a mission to capture the essence of the sitter. Maybe photography has always been limited because it can only create a still image, and it’s quite a challenge to convey who someone really is in a single photograph.

Can AI create a multidimensional persona that accurately reflects the uniqueness in each and every one of us? That’s what I’m trying to figure out.

Ginger Liu is the founder of Ginger Media & Entertainment, a Ph.D. Researcher in artificial intelligence and visual arts media, and an author, journalist, artist, and filmmaker. Listen to the Podcast.