Exploring the Intersection of AI and Mourning

In 2020, a young Chinese software engineer named Yu Jialin stumbled upon an essay about lip-syncing technology that could synchronize lip movements with recorded speech. Building a grief bot, a chatbot modeled on a deceased person, offered him a chance to say his final words to his late grandfather, the man who had played a significant role in his upbringing.

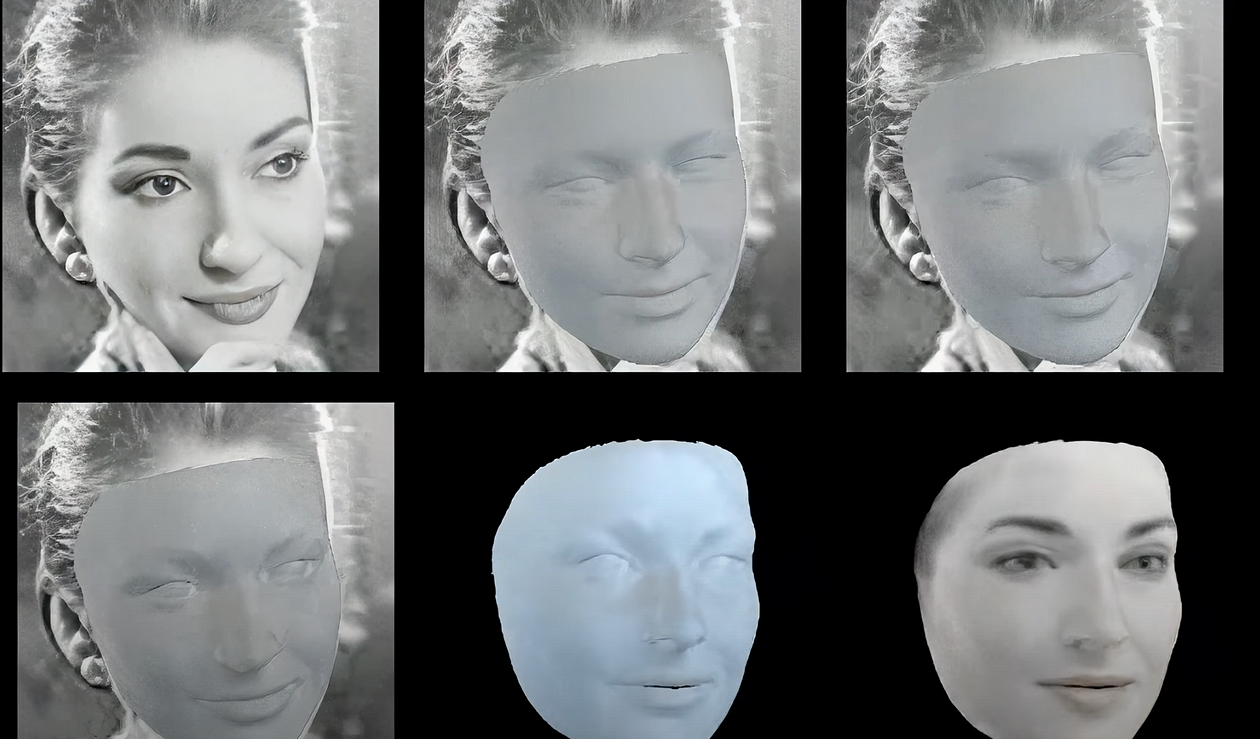

Yu recreated his grandfather’s appearance, speech, and mannerisms from family photographs, videos, letters, and phone messages, material he then used to train an AI model on his grandfather’s personality. At first, the bot’s responses were generic, but as Yu supplied more information, it began to reflect his grandfather’s habits and preferences more accurately.

Yu’s ambition to recreate his grandfather is one example of a growing trend in China, where the bereaved are using artificial intelligence to digitally resurrect the dead. The rise of grief bots in China coincides with the popularity of Xiaoice, a chatbot assistant that takes on the persona of a teenage girl and has more than 660 million users. In the United States, death tech companies have developed similar grief bots, such as Replika, which is now marketed as a social AI app. In Canada, Joshua Barbeau used an older program called Project December to digitally recreate his deceased girlfriend.

Grief bots may benefit the bereaved, but they also raise ethical concerns. They carry a risk of data fraud and deepfake fraud, and obtaining consent from the dead and their relatives presents significant challenges. Death tech companies like HereAfter.AI let individuals opt in to uploading their personalities online while they are alive, but for many companies, consent from the deceased is missing. Using personal information without explicit permission raises privacy and ethical issues, even when immediate family members are the ones using it.

Although grief bots offer a virtual presence and the ability to communicate with the deceased, they can also interfere with the grieving process and limit the mourner’s control over it. In traditional mourning, we can pack old photographs and letters away in boxes, along with the memories that trigger grief. A grief bot, by contrast, lives on the smartphone, tempting the bereaved to reach out to it every time they look at their phones.

Months after creating it, Yu deleted his grandfather’s grief bot after realizing he was becoming overly reliant on AI for emotional support. Ideally, most mourners will likewise let their grief bots fade away once they no longer need the support. However, prolonged use risks causing real harm.

Ginger Liu is the founder of Ginger Media & Entertainment, a Ph.D. Researcher in artificial intelligence and visual arts media, and an author, journalist, artist, and filmmaker.