Disney creates AI task force, Google proposes scraping publishers' work, Zoom accused of using customer data, Dungeons & Dragons bans AI-generated art

AI Companies Commit to New Safety Measures for Biden

The biggest companies in AI technology have made voluntary commitments to the White House on new safety measures, such as watermarking AI-generated content. The Biden administration has welcomed the commitments from Meta, Amazon, OpenAI, Alphabet, and others. The other measures include investing in cybersecurity to reduce risks and testing systems before releasing them to the public. This may seem like common sense, but over the past year AI companies have raced to release new products with little thought for the blowback.

Dungeons & Dragons Publisher Bans AI-Generated Artwork

Hasbro subsidiary Wizards of the Coast, the publisher of Dungeons & Dragons and owner of D&D Beyond, recently discovered that an illustrator had used AI to create commissioned artwork for an upcoming book. Fans noticed that the artwork looked suspiciously AI-generated and voiced their concerns on social media. The artist, California-based Ilya Shkipin, has worked with the publisher for almost a decade and defended his use of artificial intelligence in a recent Twitter post. The publisher then issued a statement on Twitter saying that it had approached the artist for clarification and that “He will not use AI for Wizards’ work moving forward. We are revising our process and updating our artist guidelines to make clear that artists must refrain from using AI art generation as part of their art creation process for developing D&D.”

Disney Creates Task Force to Study Artificial Intelligence

Disney has created a task force to study artificial intelligence and how it can be applied across the company. Disney is reportedly looking to “develop AI applications in-house as well as form partnerships with startups.” Although the initiative was launched earlier this year, before the Hollywood writers' and actors' strikes began, it has added to the industry's caution about what lies ahead. Disney has posted eleven job openings across the business that require artificial intelligence and machine learning skills.

PhotoGuard Developed to Protect Against AI Image Manipulation

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory have developed PhotoGuard to prevent unauthorized image manipulation and to help ensure authenticity in an era of deepfakes. Generative models such as Midjourney and DALL-E have put the creation of hyper-realistic images within reach of anyone, regardless of skill. Text prompts can also be used to alter existing images, which can pose security threats. Government agencies have suggested watermarking images, but watermarking does not go far enough to safeguard image data. PhotoGuard uses perturbations, minuscule alterations in pixel values that are imperceptible to the human eye but disrupt an AI model’s ability to manipulate an image.
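To make the idea of a protective perturbation concrete, here is a minimal sketch of one approach the PhotoGuard researchers describe, an "encoder attack". It searches for a per-pixel change no larger than a small budget that pushes the image's internal representation toward a meaningless target, so that an editing model no longer "sees" the original. This is not PhotoGuard's code. The linear `encoder_w` matrix is a hypothetical stand-in for a real diffusion model's image encoder, and the function name `immunize`, the 8/255 budget, the step size, and the iteration count are all assumptions chosen for illustration. The search uses projected gradient descent with signed steps.

```python
import numpy as np


def immunize(x, encoder_w, target_latent, eps=8 / 255, step=1 / 255, iters=100):
    """Hypothetical sketch: find delta with |delta| <= eps per pixel that pulls the
    toy linear encoder's output for x + delta toward target_latent."""
    delta = np.zeros_like(x)
    for _ in range(iters):
        # Latent of the perturbed image under the toy (assumed) linear encoder.
        z = encoder_w @ (x + delta)
        # Gradient of 0.5 * ||z - target_latent||^2 with respect to delta.
        grad = encoder_w.T @ (z - target_latent)
        # Signed-gradient descent step toward the target latent.
        delta = delta - step * np.sign(grad)
        # Project back into the L-infinity ball of radius eps (imperceptibility budget).
        delta = np.clip(delta, -eps, eps)
        # Keep perturbed pixels in the valid [0, 1] range.
        delta = np.clip(x + delta, 0.0, 1.0) - x
    return np.clip(x + delta, 0.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.random(64)                    # a flattened 8x8 grayscale "image" in [0, 1]
    W = rng.normal(size=(16, 64))         # hypothetical toy encoder weights
    target = W @ np.full(64, 0.5)         # latent of a flat gray image
    x_adv = immunize(x, W, target)
    print("max pixel change:", np.abs(x_adv - x).max())
    print("latent distance before:", np.linalg.norm(W @ x - target))
    print("latent distance after:", np.linalg.norm(W @ x_adv - target))
```

The clipping to a small per-pixel budget mirrors the article's point: the image looks unchanged to a person, while the model's internal representation moves toward the target. A real system would compute gradients through a neural network rather than a fixed matrix.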

Google Wants AI Systems to Scrape Publishers’ Work

Google aims to mine publishers’ content for its generative artificial intelligence systems and will give publishers the opportunity to opt out of the process. The company has yet to explain how this opt-out would work, but its proposal could upend copyright law and harm smaller content creators. In its submission to the Australian government’s review of AI regulation, Google argued that copyright law should be changed to allow generative AI systems to scrape the internet freely.

Documentary Filmmakers Cautious About Use of AI

Documentary filmmakers Andrew Rossi and Dawn Porter have expressed concerns about the ethical use of artificial intelligence in non-fiction work, which is bound by standards of truth and integrity. Filmmakers have used AI for years to transcribe interviews, and more recently Midjourney and ChatGPT have helped editors create spreadsheets and extract metadata. But recent advances in AI also make it possible to create fake photographs and deepfake videos and audio. “We are supposed to be the truth, and it might be the truth as we see it, but we are also supposed to be transparent,” says Dawn Porter. “I’m very nervous that people are not going to be transparent about what techniques they are using and why.”

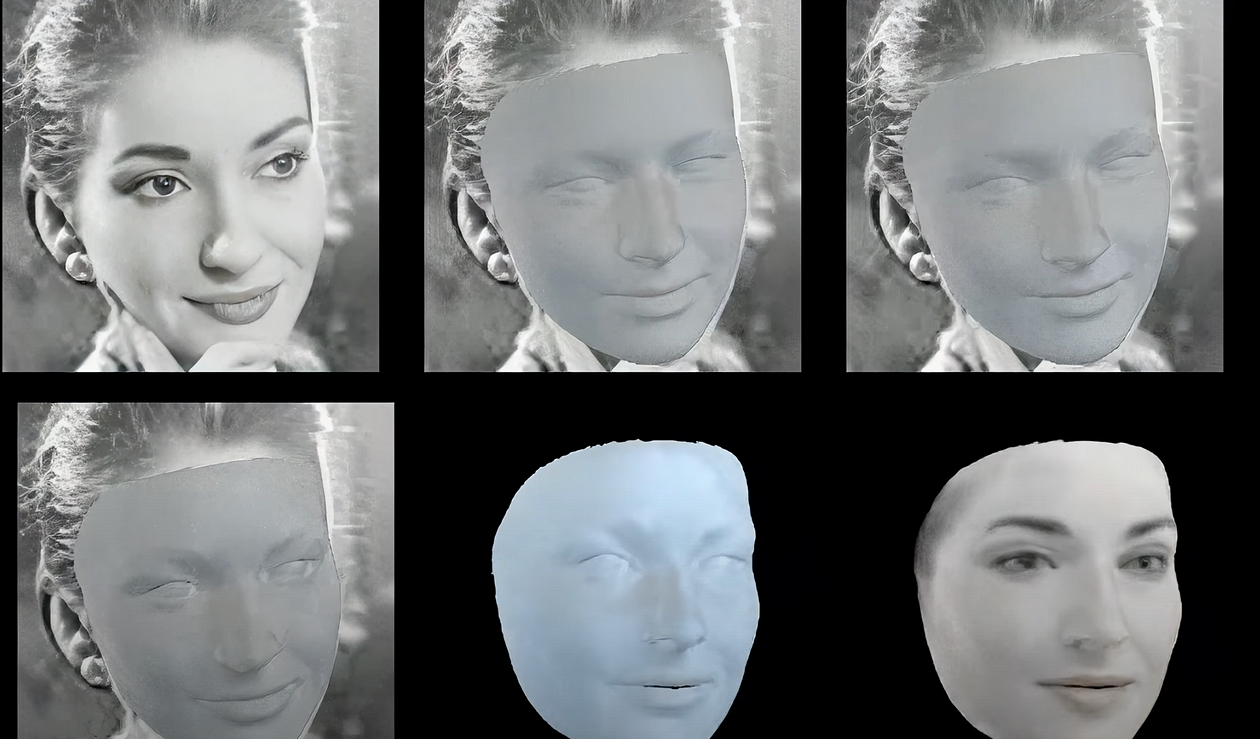

Itseez3D Releases Avatar SDK Deep Fake Detector

3D graphics and digital identity company Itseez3D has launched the Avatar SDK Deep Fake Detector to support user identity protection. The platform aims to help businesses strengthen user security and application integrity. It was launched in response to the rise of deepfake technology and wider security concerns. Verifying user identities remains an ongoing challenge for developers building trustworthy applications. The platform uses machine learning algorithms to analyze facial features and identify synthetic 3D avatars.

Kickstarter Projects Will Require Generative AI Disclosure

Kickstarter has announced that projects on its platform that use AI tools to generate text, images, music, or audio will require disclosure on the project pages. Creators will need to state whether the project uses AI-generated content and specify which elements are AI-generated and which are original. Project owners will also have to declare how their sources handle consent and credit. AI tools such as ChatGPT and Stable Diffusion were trained on public images and text scraped from the web without credit or compensation to photographers, writers, and artists. Kickstarter also plans to introduce its own opt-out safeguards for content creators.

Zoom in Legal Woes for Using Customer Data for Training AI

The videoconferencing platform could be heading to court in Europe over its privacy statement. In March 2023, Zoom added a clause to its terms and conditions that was later picked up on Hacker News, where users claimed it allowed Zoom to use customer data to train AI models with no way to opt out.

Latest Gartner Survey Finds Generative AI is a Risk for Businesses

Gartner surveyed 249 enterprise risk executives about emerging risks, including the potential risks generative AI poses to businesses. Gartner’s Quarterly Emerging Risk Report includes detailed information on the impact, attention, time frame, and opportunities of perceived risks. Financial planning uncertainty, third-party viability, cloud concentration, and trade tensions with China were also major concerns. The survey covered the cybersecurity, data privacy, and intellectual property risks of generative AI.

Allen Ginsberg’s Poetry Recreated with Artificial Intelligence

The Fahey/Klein Gallery in Los Angeles is exhibiting generative AI poems created from Allen Ginsberg’s private collection of photographs. The poems are generated using an AI-powered camera that turns visual images into text. The exhibition, Muses & Self, presents photographs from the Ginsberg estate together with the AI-generated A Picture of My Mind: Poems Written by Allen Ginsberg’s Photographs. The exhibition is a collaboration with the NFT poetry gallery TheVERSEverse and the Tezos Foundation.

Ginger Liu is the founder of Hollywood’s Ginger Media & Entertainment; a Ph.D. researcher in artificial intelligence and visual arts media, specifically grief tech, digital afterlife, AI, death and mourning practices, AI and photography, biometrics, security, and policy; and an author, writer, artist photographer, and filmmaker. Listen to the podcast: The Digital Afterlife of Grief.