How the EU AI Act is Leading the Way

Sophisticated deepfakes designed to mimic individuals within organizations have made headlines recently. A UK-based firm lost $243,000 when fraudsters used a deepfake of a chief executive's voice to order an employee to wire the money. Another company fell victim to a voice-cloning attack that copied the voice of the company's director, resulting in a staggering $35 million loss. Binance's Chief Communications Officer was targeted by a sophisticated hacking team that used existing video footage of him to create an AI "hologram" convincing enough to lure members of the crypto community into fraudulent meetings. And there are too many stories to mention of everyday people who have been swindled by replicas of familiar voices.

Accenture's Cyber Threat Intelligence (ACTI) warns that while some deepfakes can seem crude, deepfake technology is trending toward greater sophistication at lower cost, which means that potentially anyone can create a convincing deepfake to cheat the unsuspecting.

Over the past two years, ACTI has observed significant changes in how deepfake technology is used. Between January and December 2021, much of the underground fraud activity revolved around cryptocurrency scams and gaining unauthorized access to crypto accounts through deepfake replicas.

From January to November 2022, however, the focus shifted to something more sinister: using deepfake technology to infiltrate corporate networks. Underground forums saw a substantial rise in discussions of this attack method, which aims to bypass security measures. The change marks a move away from crude crypto-based schemes toward sophisticated tactics that breach corporate networks and amplify techniques already employed by numerous threat actors.

ACTI believes that the changing nature and use of deepfakes are partly fueled by advances in artificial intelligence. Easier access to the hardware, software, and data needed to create convincing deepfakes has made the process more widespread, user-friendly, and cost-effective. Some professional services now offer deepfake platforms for as little as $40 per month.

Preemptive Strategies

To prevent deepfake-enabled cyber fraud, ACTI recommends incorporating deepfake and phishing training into employee education programs. Ideally, all employees should undergo this training to improve their awareness and understanding of these threats. Organizations should also establish standard operating procedures for employees to follow if they receive an internal or external message containing a deepfake. Finally, continuous monitoring of the internet for potentially harmful deepfakes, using automated searches and alerts, can aid early detection and response.

The Impact of AI on Cybersecurity

The business world has been quick to embrace the advantages of AI. For example, software engineers are improving their efficiency with AI code-generation tools such as GitHub Copilot. However, these tools can also speed up the work of cyber attackers, reducing the time required to exploit vulnerabilities. One specific concern is data leakage: a company's intellectual property could be exposed if the AI tool learns from the code its developers share with it.

The proliferation of generative AI chatbots has also raised cybersecurity concerns, such as fraudulent websites posing as ChatGPT in order to harvest sensitive information.

Reliance on generated code carries the risk of deploying vulnerable code and introducing new security flaws. A recent NYU study found that approximately 40% of the code generated by Copilot contained common vulnerabilities. Meanwhile, the latest generation of AI chatbots can impersonate humans on a huge scale, which gives cybercriminals a significant advantage.

Previously, attackers had to choose between a broad and shallow approach, targeting numerous potential victims via phishing, or a more sophisticated narrow and deep strategy, called spear phishing, that focused on a few targets or even a single one with personalized impersonation. AI chatbots, however, can impersonate humans at scale, whether through chat interactions or personalized emails. In response, security measures will likely employ other forms of AI, such as deep learning classifiers. AI-powered detectors for identifying manipulated images already exist.

Enhancing Cyber Defense

AI cybersecurity technology has significantly reduced the time needed to identify and contain data breaches. Identifying and containing a breach traditionally took around 277 days on average, but with AI and automation, breaches can be resolved within 200 days, yielding average cost savings of $1.12 million. Organizations using AI and automation reported even greater savings, averaging $3 million.

AI can automate tasks and analyze large, complex datasets. With the emergence of cybersecurity-specific products built on large language models, companies can automate threat assessments and analyze potential malware faster. Although challenges such as data quality persist, AI can play a crucial role in improving threat detection and response.

Strengthening National Security Systems

AI and machine learning have the potential to strengthen systems essential to national security. Recent hearings in the Senate Armed Services Committee highlighted the vulnerability of major weapons systems to cyberattacks: simple tools and techniques can compromise these systems, often without being detected. Even if weapons systems were uniformly secure, AI would still provide substantial benefits. It can mitigate risks, enhance defense capabilities, and provide operational advantages.

Enhancing National Security

AI's impact on national security extends beyond the United States. The Annual Threat Assessment of the U.S. Intelligence Community emphasized China's ambition to become the world's primary AI innovation center by 2030, backed by heavy investment in AI and big data analytics. AI is a critical tool for enhancing national security, countering emerging threats, and maintaining a competitive advantage in an increasingly complex global landscape.

While concerns surrounding privacy and security in AI development should not be ignored, it is essential to acknowledge the substantial benefits that AI, machine learning, and large language models bring to cybersecurity and national security. Policymakers should focus on using AI effectively, establishing proactive regulations, and fostering international collaboration.

AI Regulation

OpenAI CEO Sam Altman recently urged the United States Congress to regulate the technology that he and others have brought to consumers. Yet despite initiatives such as the AI Bill of Rights and frameworks proposed by Senate Majority Leader Chuck Schumer, the U.S. still lacks comprehensive AI legislation. Much of federal policy has been advisory, leaving binding rules largely to state and local governments. Whether this fragmented approach can address AI's impact on the information economy, employment, and society remains unknown.

European Union

The European Parliament is advancing the European Union Artificial Intelligence Act, which sets out tiered rules based on the level of risk an AI system poses. While these policy ideas can inform regulatory debates worldwide, the AI Act could place a disproportionate burden on smaller businesses. The General Data Protection Regulation (GDPR) offers a clear example: its complex rules have favored big tech companies, while smaller companies have struggled to comply with the law. The successes and failures of the GDPR should be weighed when drafting AI regulation for local, national, and global enterprises.

The European Parliament's recent vote marks a significant milestone in the effort to regulate AI, in what remains largely uncharted territory. With the emergence of chatbots like ChatGPT and deepfake technology, the need for AI guidelines has become urgent.

First proposed in 2021, the EU's Artificial Intelligence Act is designed to govern any product or service that uses AI technology. Its key provisions and implications are as follows.

Enforcement and Penalties

Enforcement of the regulations will be the responsibility of the EU's 27 member states. Regulators will have the authority to force companies to withdraw their AI applications from the market if they fail to comply. In severe cases, violations may result in fines of up to 40 million euros ($43 million) or 7% of a company's annual global revenue, whichever is higher.

Mitigating Risks

The primary objective of the AI Act is to safeguard people's well-being and to protect individuals and businesses from AI-related threats. For example, the act prohibits social scoring systems that assess individuals based on their behavior. AI systems that exploit vulnerable people, including children, or that use subliminal manipulation, such as interactive toys that encourage dangerous behavior, are also strictly forbidden. Predictive policing tools, which use data to forecast potential crimes, are prohibited as well.

Changes to the Original Proposal

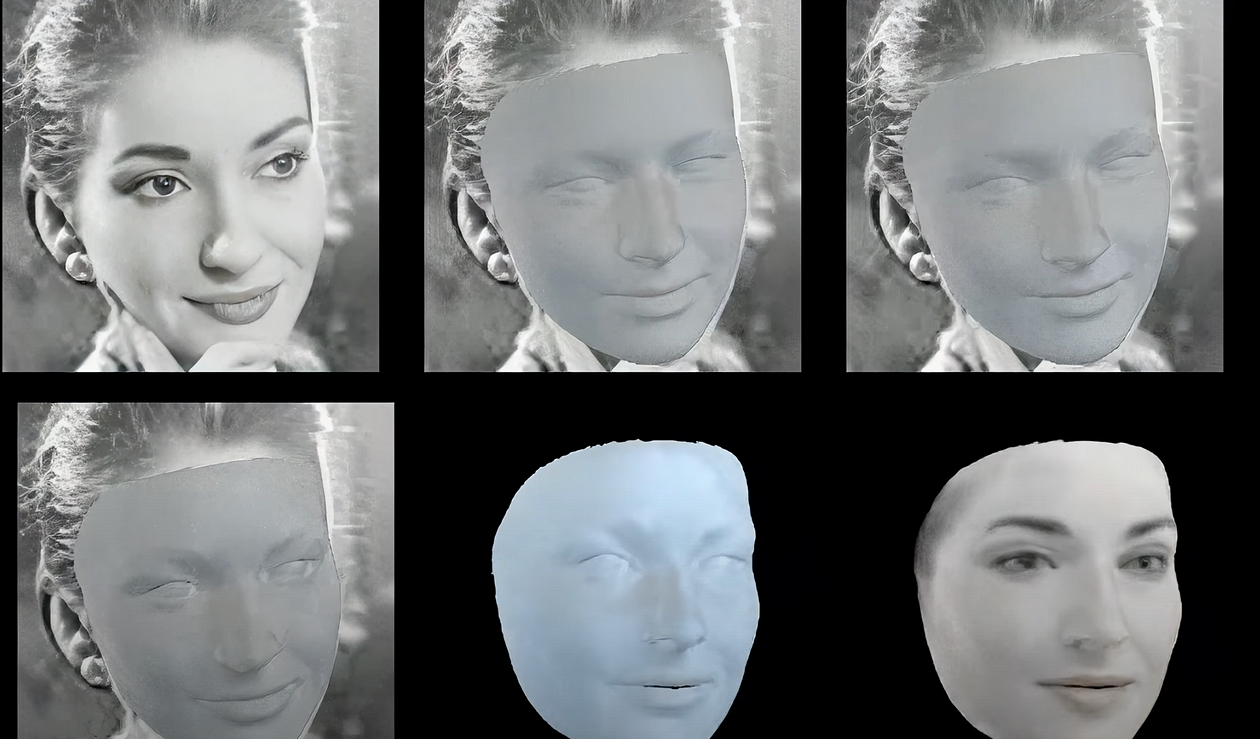

Lawmakers expanded the European Commission's initial proposal by extending the ban to real-time remote facial recognition and biometric identification in public spaces. Facial recognition AI scans individuals and matches their faces or other physical traits against databases. This technology was used extensively in London during King Charles's coronation in May 2023.

Requirements for High-Risk AI Systems

AI systems used for hiring and admissions decisions, in areas such as employment and education, must meet rigorous standards, including transparency in how they operate, measures to assess and mitigate algorithmic bias, and steps to reduce the risks associated with these systems. The European Commission emphasizes that the majority of AI systems, such as video games or spam filters, fall into the low- or no-risk category.

Inclusion of ChatGPT and Copyright Protection

While the initial proposal barely mentioned chatbots, lawmakers agreed that the popularity of general-purpose AI systems like ChatGPT warranted expanding the regulations to cover such technologies. Notably, the act now requires developers to document any copyrighted material used to train AI systems that generate text, images, video, or music resembling human work. This requirement lets content creators determine whether their work has been used without permission and take appropriate action.

The Significance of EU Rules

Although the European Union is not at the forefront of AI development, it leads the way in setting regulations for global tech giants such as Google and Apple. The EU's single market has 450 million consumers, so companies often find it easier to comply with one set of uniform rules than to develop different products for different markets.

China

China has adopted a distinctive approach to AI regulation, one that caters to small companies and aims to lead the global AI race. The Beijing AI Principles are supported by the Chinese government and by tech giants such as Alibaba, Baidu, and Tencent. China has also introduced rules for generative AI, such as requiring government approval before AI products are released.

Global Efforts

Other countries, including the United Kingdom, Brazil, Canada, Germany, Israel, and India, are developing national strategies for ethical AI use. However, no country has fully grasped the complexities of AI regulation or how AI will affect all of our lives. Because AI evolves so quickly, regulatory measures will need to be updated continually.

While rules and legislation play a vital role in enforcing societal norms, alternative methods can regulate AI research, development, and deployment without stifling innovation. Self-regulatory organizations are one such framework, allowing the industry to establish its own standards and best practices. Sanctions similar to those imposed by the Financial Industry Regulatory Authority (FINRA) could hold AI researchers and AI vendors accountable.

The ultimate goal of AI regulation is to balance multiple objectives, including technological innovation, economic interests, national security, and geopolitical considerations. Regulation must take both current and future needs into account while aligning with existing regulatory frameworks and institutions.

Ginger Liu is the founder of Ginger Media & Entertainment, a Ph.D. Researcher in artificial intelligence and visual arts media — specifically death tech, digital afterlife, AI death and grief practices, AI photography, biometrics, security, and policy, and an author, writer, artist photographer, and filmmaker. Listen to the Podcast — The Digital Afterlife of Grief.