Biometric security and digital forensics take a bashing

In 2017, a Reddit user coined the term deepfake to describe the synthetic creation of hyperrealistic replicas of real people from photos, audio, and video. In today’s digital age, the rise of deepfakes presents significant challenges and threats to the authenticity of media content. As deepfake technology advances toward the point where no one can tell what is real from what is generated by artificial intelligence (AI), the potential personal and national security implications are increasingly problematic.

Congressional oversight, defense authorizations, appropriations, and the regulation of social media platforms will all be affected by deepfake technology unless new regulations and robust security measures are put in place. The trouble is that generative AI and the creation of deepfakes are outsmarting a world that still lingers in the pre-AI age, and governments, businesses, and society need to catch up.

Deepfakes

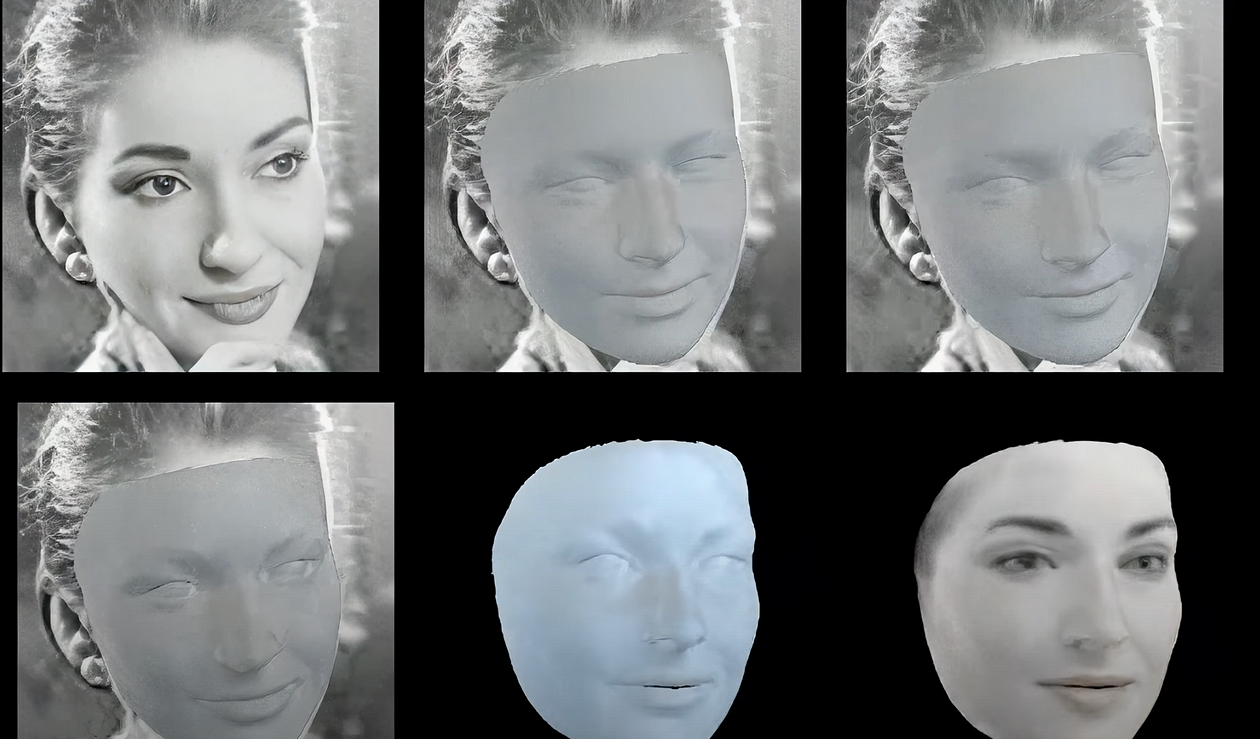

So how are deepfakes actually created? Deepfakes are a form of synthetic media created using AI algorithms, which manipulate and alter existing images or videos to convincingly depict someone else’s likeness. While definitions may vary, the most common method involves machine learning (ML), particularly generative adversarial networks (GANs). Two neural networks, known as the generator and the discriminator, are pitted against each other in a competitive training process. The generator’s task is to produce counterfeit data that closely resembles the original data set, while the discriminator’s job is to identify the fakes. With each iteration, the generator adjusts to create increasingly realistic data, continuing until the discriminator can no longer distinguish between real and counterfeit information.
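
The generator-versus-discriminator loop described above can be illustrated with a deliberately tiny example. This is a minimal sketch, not how production deepfake models are built: it assumes one-dimensional "data" drawn from a normal distribution, a linear generator, and a logistic discriminator, and every name and hyperparameter in it is hypothetical.

```python
import math
import random
from typing import Tuple


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def train_toy_gan(
    steps: int = 2000,
    lr: float = 0.01,
    real_mean: float = 4.0,
    real_std: float = 0.5,
    seed: int = 0,
) -> Tuple[float, float, float, float]:
    """Alternate discriminator and generator updates on 1-D toy data.

    Generator:      G(z) = a * z + b        (z is random noise)
    Discriminator:  D(x) = sigmoid(w * x + c)  (probability that x is real)
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters (illustrative starting values)
    w, c = 0.1, 0.0   # discriminator parameters (illustrative starting values)

    for _ in range(steps):
        x_real = rng.gauss(real_mean, real_std)
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b

        # Discriminator step: gradient ascent on log D(real) + log(1 - D(fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w += lr * ((1.0 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: gradient ascent on log D(fake), i.e. try to fool D.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (1.0 - d_fake) * w   # derivative of log D(x) w.r.t. x
        a += lr * grad_x * z
        b += lr * grad_x

    return a, b, w, c
```

Calling `train_toy_gan()` returns the final parameters. Early in training the generator's offset `b` is pushed from 0 toward the real data, which is the "adjusts to create increasingly realistic data" behavior in miniature. Toy setups like this one can oscillate rather than settle, which is one reason real GAN training needs far more care.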

Deepfakes are created with software that maps facial features using key reference points called landmarks. These landmarks include the corners of the eyes and mouth, the nostrils, and the contours of the jawline. Face-swapping systems are commonly built on an encoder-decoder architecture, in which an encoder compresses a face into a compact latent representation and a decoder reconstructs an image from it. An enhanced version of this architecture uses a GAN in the decoder: the generator creates new images based on the latent representation, while the discriminator tries to discern whether an image is generated or real. Both networks continually improve through a zero-sum game, which makes deepfakes challenging to counteract. By analyzing a vast collection of images, a GAN can produce a new image that approximates the visual characteristics of the input photos without being an exact replica of any single image. GANs have a wide range of applications, including generating audio and text based on existing examples.
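
To make the role of landmarks more concrete, the sketch below shows one simple way landmark points could be used to line up one face with another before any blending takes place. It is an illustration under stated assumptions rather than a description of any particular deepfake tool: it uses only two eye landmarks, treats them as 2-D points, and fits a similarity transform (scale, rotation, and shift). The function names are hypothetical.

```python
import cmath
import math
from typing import Tuple

Point = Tuple[float, float]


def similarity_from_eyes(
    src_left: Point, src_right: Point, dst_left: Point, dst_right: Point
) -> Tuple[complex, complex]:
    """Fit z -> s * z + t mapping the source eye landmarks onto the target ones.

    Points are treated as complex numbers, so multiplying by s applies a
    rotation and a uniform scale in a single step.
    """
    p1, p2 = complex(*src_left), complex(*src_right)
    q1, q2 = complex(*dst_left), complex(*dst_right)
    if p1 == p2:
        raise ValueError("source eye landmarks must be distinct")
    s = (q2 - q1) / (p2 - p1)
    t = q1 - s * p1
    return s, t


def scale_and_rotation(s: complex) -> Tuple[float, float]:
    """Return (scale factor, rotation in degrees) encoded by s."""
    return abs(s), math.degrees(cmath.phase(s))


def apply_similarity(s: complex, t: complex, point: Point) -> Point:
    """Move any other landmark (mouth corner, nostril, jaw point) the same way."""
    z = s * complex(*point) + t
    return (z.real, z.imag)
```

Once the transform is known, every remaining landmark on the source face can be passed through `apply_similarity` so that the two faces share a common pose. Real systems typically use dozens of landmarks and more flexible warps.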

While manipulating media is not a novel concept, the use of AI to generate deepfakes has sparked concern due to their growing realism, speed of creation, and low cost. Freely available software and the ability to rent processing power through cloud computing mean that individuals lacking advanced technical skills can easily access the necessary tools and produce highly convincing counterfeit content using publicly available data. This means that governments, big tech companies, and everyday freelancers alike can disrupt the world order.

In a relatively short time, deepfake technology has passed from the hands of tech companies to individuals with the relevant expertise, with significant political, ethical, security, and social implications. The accessibility of AI technology allows fraudsters to create deepfakes that pose a significant threat to both businesses and individuals.

World News and Historical Events

The credibility of news sources and online media is already a subject of concern, and deepfake technology can spread false information and undermine trust further. If media can be manipulated or fabricated, it raises the question of whether anything online can be considered real. Photographs, videos, and audio recordings have always documented our understanding of the past. However, deepfakes have the capacity to fabricate people and events and to cast doubt on historical facts, such as the Holocaust, the moon landing, and the 9/11 terrorist attacks.

U.S. intelligence concluded that Russia engaged extensively in efforts to influence the outcome of the 2016 presidential election and to harm the electability of Secretary Hillary Clinton. More recently, in March 2022, Ukrainian President Volodymyr Zelensky revealed that a video posted on social media that showed him ordering Ukrainian soldiers to surrender to Russian forces was a deepfake. While this deepfake was not highly sophisticated, advancements in technology could significantly amplify the impact of malicious influence on political events. The malicious use of deepfake technology includes spreading misinformation, identity theft, propaganda dissemination, cyber threats, manipulation of political elections, financial fraud, reputation damage, and various scams on social media platforms.

Raising the Dead

Deepfakes have also been used to resurrect the dead, or at least their likeness. In 2020, the late Robert Kardashian’s likeness was recreated using a combination of deepfake technology and a hologram created by the SFX company Kaleida. In the same year, the likeness of Parkland shooting victim Joaquin Oliver was recreated with deepfake technology. Oliver’s parents worked with the non-profit Change the Ref and McCann Health to highlight gun-related deaths and gun safety legislation. And in 2022, Elvis Presley digitally appeared during the 17th season of America’s Got Talent.

Biometric Security and Deepfake Fraud

Research conducted by Regula indicates that approximately one-third of businesses have already fallen victim to deepfake fraud attacks. Although neural networks can aid in identifying deepfakes, they should be used alongside anti-fraud measures that concentrate on physical and dynamic factors. These measures include conducting face liveness checks and verifying document authenticity using optically variable security elements.

Biometric verification is an indispensable part of the process, providing liveness verification to ensure that no malicious actors are attempting to present non-live imagery, such as a mask, printed image, or digital photo, during the verification process. Ideally, biometric verification solutions should also match a person’s selfie with their ID portrait and any databases utilized by the organization to ensure the person’s identity consistency.
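
The combination of a liveness check with a selfie-to-ID comparison can be sketched as a small decision function. The sketch assumes that some upstream face-recognition model has already turned each image into a numeric embedding and that a separate liveness detector has returned a pass or fail result; neither component is shown, and the function names and the 0.8 threshold are hypothetical placeholders rather than recommended values.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def verify_identity(
    selfie_embedding: Sequence[float],
    id_embedding: Sequence[float],
    liveness_passed: bool,
    threshold: float = 0.8,  # illustrative value, not a recommendation
) -> bool:
    """Accept only if the capture was live AND the two faces match."""
    if not liveness_passed:
        # A mask, printed image, or replayed photo is rejected outright,
        # however closely it resembles the ID portrait.
        return False
    return cosine_similarity(selfie_embedding, id_embedding) >= threshold
```

The ordering is the design point: a perfect face match is worthless if the capture was not live, so the liveness result gates the comparison rather than being averaged into a single score.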

However, advanced identity fraud extends beyond AI-generated fakes. In the past year, 46% of organizations worldwide have suffered from synthetic identity fraud. The banking sector is particularly vulnerable to this type of identity fraud, with 92% of companies in the industry viewing synthetic fraud as a genuine threat and 49% having recently encountered this scam. In this type of fraud, known as Frankenstein identity, criminals combine real and fake ID information to create entirely new, artificial identities, which are then used to open bank accounts or make fraudulent purchases. To combat the majority of existing identity fraud, companies should implement document verification and comprehensive biometric checks.

Thorough ID verification is crucial when establishing someone’s identity remotely. Companies need to conduct a wide range of authenticity checks that encompass all security features found in IDs. Even in a zero-trust-to-mobile scenario that involves Near Field Communication (NFC)-based verification of electronic documents, chip authenticity can be reverified on the server side, which is currently considered the most secure method for confirming a document’s authenticity.
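
The article does not describe how a server rechecks chip data, so the following is only a sketch of one common ingredient of such schemes: the server recomputes hashes of the data read from the chip and compares them with reference hashes that the document issuer has signed. The sketch assumes the issuer's signature over those reference hashes has already been validated elsewhere, uses SHA-256 for illustration, and uses labels such as `DG1` and `DG2` purely as example names for data groups. The function name is hypothetical.

```python
import hashlib
import hmac
from typing import List, Mapping


def recheck_chip_data(
    data_groups: Mapping[str, bytes],
    signed_hashes: Mapping[str, bytes],
) -> List[str]:
    """Return the names of data groups that fail the server-side recheck.

    A group fails if it is missing on either side or if the SHA-256 hash of
    the bytes received from the mobile device differs from the signed value.
    An empty list means every group matched.
    """
    failed = []
    for name in sorted(set(data_groups) | set(signed_hashes)):
        data = data_groups.get(name)
        expected = signed_hashes.get(name)
        if data is None or expected is None:
            failed.append(name)
            continue
        actual = hashlib.sha256(data).digest()
        # compare_digest avoids leaking information through timing differences.
        if not hmac.compare_digest(actual, expected):
            failed.append(name)
    return failed
```

Running the comparison on the server rather than on the phone reflects the zero-trust assumption in the text: the mobile device is treated as a relay for the chip's data, not as a party whose verdict is believed.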

The issue of deepfakes and generative AI continues to be problematic for fraud prevention experts, especially now that tech companies like Tencent Cloud have introduced a service called Deepfakes-as-a-Service, which generates digital replicas of individuals based on three minutes of video footage and one hundred spoken sentences. For a fee of $145, users can obtain these interactive fakes within a 24-hour timeframe.

Digital Forensics

Generative AI technology presents a significant threat to digital forensics. Deepfakes represent just the surface of the issue, according to Anderson Rocha, a professor and researcher at the State University of Campinas. In his keynote speech, “Deepfakes and Synthetic Realities: How to Fight Back?”, at the recent EAB & CiTER Biometrics Workshop, Rocha argued that generative AI is challenging long-standing assumptions in forensic data investigations. He emphasized that while the singularity is still distant, advances in AI technology have reached a level where they can appear almost magical or, in other words, indistinguishable from the real.

AI has been used in digital forensics to aid in the identification, analysis, and interpretation of digital evidence. It helps in detecting artifacts left behind by any modifications made to a piece of evidence. Detecting subtle manipulations created by AI now requires a combination of detectors and machine learning. Rocha’s team began investigating the authenticity of photographs depicting Brazil’s then-President in 2009, which were published in news media. However, the explosion of data and the rapid progress of neural networks since 2018 have created a headache for forensic experts trying to identify manipulated photos and other types of evidence. Rocha believes that the true threat posed by generative AI lies not only in deepfakes but also in manipulations that leave no detectable artifacts.

Generative AI

Generative AI can operate in a multimodal manner, using extensive datasets of existing content to create new outputs such as images or text. Large language models (LLMs) are a type of AI model trained on vast datasets of text and code. They can generate text, translate languages, produce creative content, and provide informed answers to queries. LLMs are trained through deep learning, a subset of machine learning that uses artificial neural networks. These networks can pick out patterns in data, such as medical data from patient histories. By training on large datasets, LLMs learn the statistical relationships between words and concepts.
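
The idea of learning "statistical relationships between words" can be shown at its very simplest with a bigram counter, which estimates how likely each word is to follow another. This is a toy illustration only: real LLMs use neural networks over tokens and far longer contexts, not word-pair counts, and the function names here are hypothetical.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Dict


def train_bigram(text: str) -> DefaultDict[str, Counter]:
    """Count, for every word, which words follow it and how often."""
    counts: DefaultDict[str, Counter] = defaultdict(Counter)
    words = text.lower().split()
    for previous, following in zip(words, words[1:]):
        counts[previous][following] += 1
    return counts


def next_word_probs(counts: DefaultDict[str, Counter], word: str) -> Dict[str, float]:
    """Turn the raw counts for one word into probabilities of the next word."""
    following = counts.get(word.lower())
    if not following:
        return {}  # the word never appeared with a successor in the training text
    total = sum(following.values())
    return {candidate: n / total for candidate, n in following.items()}
```

Given the training text `"the cat sat on the mat the cat ran"`, the model has seen "the" followed by "cat" twice and by "mat" once, so `next_word_probs` assigns "cat" two-thirds of the probability. Scaling the same principle up to billions of parameters and documents is, loosely speaking, what lets an LLM produce fluent text.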

OpenAI’s ChatGPT and Google AI’s Bard both rely on massive datasets of text and code to generate content, translate languages, produce various types of written materials, and provide responses to questions. ChatGPT uses the Generative Pre-trained Transformer 4 (GPT-4), while Google’s Bard relies on its bespoke Language Model for Dialogue Applications (LaMDA). Bard can also source real-time information from the internet when formulating responses. ChatGPT’s dataset is limited, for now, to information scraped up until 2021, which means it may not offer real-time or up-to-date information.

Identifying Deepfakes

Advancements in AI technology make it increasingly difficult to identify deepfake media, but there are some obvious errors to look out for. Here are ten potential giveaways for spotting deepfake videos:

- Look out for unconvincing eye movements, like the absence of blinking. Replicating natural eye movement and blinking patterns is difficult for deepfake algorithms.

- Awkward facial-feature positioning, such as a nose and chin pointing in opposite directions.

- A lack of emotion. Deepfakes may also exhibit facial morphing, where the displayed emotion does not match the content being conveyed.

- Awkward body movements, such as unnatural body shape, posture, or jerky movements, can indicate a deepfake. Deepfake technology typically prioritizes facial features over the entire body.

- Unnatural coloration, such as abnormal skin tones, discolored patches, improper lighting, and misplaced shadows, is a sign that the video content may be artificial.

- Unrealistic hair and teeth, as deepfakes often lack realistic details like frizzy or flyaway hair and the distinct outlines of individual teeth.

- Blurring or misalignment, such as blurred edges or misaligned visuals at the point where the face meets the neck.

- Deepfake creators often dedicate more effort to video images than audio. Poor lip-syncing, robotic voices, odd pronunciations, digital background noise, or the absence of audio can be indicators of a deepfake.

- Analyzing a video on a larger screen or using video-editing software to slow down the playback can reveal inconsistencies that are not visible at regular speed. Focusing on details like lip movements can expose faulty lip-syncing.

- Some video creators use cryptographic algorithms to insert hashes at specific points throughout a video, establishing its authenticity.
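
The last giveaway in the list refers to cryptographic techniques for establishing a video's authenticity. One way to read it is that a creator computes a cryptographic hash for each segment of the video and publishes those values, so that anyone can later recompute them and see whether a segment has been altered. The sketch below assumes the video has already been split into byte segments and that the published hashes come from a source the viewer trusts; the function names are hypothetical, and a real scheme would normally add a digital signature on top.

```python
import hashlib
from typing import List, Optional, Sequence


def segment_hashes(segments: Sequence[bytes]) -> List[str]:
    """Compute a chained SHA-256 digest for each segment.

    Each digest covers the segment AND the previous digest, so removing,
    reordering, or editing a segment changes every digest from that point on.
    """
    digests: List[str] = []
    previous = b""
    for segment in segments:
        digest = hashlib.sha256(previous + segment).digest()
        digests.append(digest.hex())
        previous = digest
    return digests


def find_first_tampered(
    segments: Sequence[bytes], published: Sequence[str]
) -> Optional[int]:
    """Return the index of the first segment that fails the check, or None."""
    recomputed = segment_hashes(segments)
    for index, (ours, theirs) in enumerate(zip(recomputed, published)):
        if ours != theirs:
            return index
    if len(recomputed) != len(published):
        # Segments were appended or cut off after the last matching one.
        return min(len(recomputed), len(published))
    return None
```

A creator would call `segment_hashes` once at publication time, and a viewer or platform would call `find_first_tampered` on the copy they received. Note that a matching hash shows only that the copy is unchanged since the hashes were published, not that the original footage was genuine.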

Generative AI technology is advancing at such a rapid pace that the ten deepfake indicators above will soon be redundant. In response to the potential misuse of generative AI, the Cyberspace Administration of China has implemented regulations that require clear labeling for products produced by AI. The United States and the European Union are proposing similar regulations.

Ginger Liu is the founder of Ginger Media & Entertainment, a Ph.D. Researcher in artificial intelligence and visual arts media — specifically death tech, digital afterlife, AI death and grief practices, AI photography, biometrics, security, and policy, and an author, writer, artist photographer, and filmmaker. Listen to the Podcast — The Digital Afterlife of Grief.